To support a conjecture in the world of humans, we often point to the natural world as some kind of final arbiter. “You see, this is the way it works in nature, therefore this is the way it is.” Aesop’s fable about the Ant and the Grasshopper has been used in this way in political circles for years. The social behavior of ants and bees has also been of particular interest to those of us thinking about the complex digital social networks emerging all around us. We take the folk wisdom of Aesop as gospel and, using that tool, attempt an interpretation. Ants are industrious, collective and coordinated. If only people could join together in such a natural kind of cooperation. It’s only our human foibles that prevent this return to Eden.

Meanwhile, Anna Dornhaus, a professor of ecology and evolutionary biology, has been painting ants. She does this so that she can track the behavior of individual ants. Despite the anthropomorphism of Aesop’s fable, we tend to think of ants as a swarm, as a collective. In a fascinating profile of Dornhaus by Adele Conover in the NY Times, we discover that:

“The specialists aren’t necessarily good at their jobs,” said Dornhaus. “And the other ants don’t seem to recognize their lack of ability.”

Dr. Dornhaus found that fast ants took one to five minutes to perform a task — collecting a piece of food, fetching a sand-grain stone to build a wall, transporting a brood item — while slow ants took more than an hour, and sometimes two. And she discovered that about 50 percent of the other ants do not do any work at all. In fact, small colonies may sometimes rely on a single hyperactive overachiever.

A few days ago I was re-reading Clay Shirky’s blog post on Power Laws and Blogging, which describes the distribution of popularity within the blogosphere. In his book, Here Comes Everybody, he expands on this idea of self-organizing systems and power law distributions to describe how things generally get done in social networks like Wikipedia. Yochai Benkler has also described aspects of this process, which he calls commons-based peer production.

Shirky’s work, combined with Dornhaus’s, gives you a view into the distribution of labor within the commons of a social network. Benkler’s book, The Wealth of Networks, is a play on Adam Smith’s The Wealth of Nations, and in Dornhaus’s experiments we find some interesting data that runs contrary to Smith’s conjecture about the benefits of specialization:

My results indicate that at least in this species (ants), a task is not primarily performed by individuals that are especially adapted to it (by whatever mechanism). This result implies that if social insects are collectively successful, this is not obviously for the reason that they employ specialized workers who perform better individually.

As Mark Thoma notes, Adam Smith cites three benefits of specialization:

- The worker becomes more adept at the task.

- Time is saved by not switching between tasks.

- Tasks can be isolated and identified, so machinery can be built to do the job in place of labor.

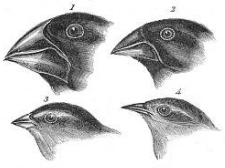

As we begin to think about the characteristics of “swarming behavior” within digital networks, we can now start to “paint the ants” and look much more closely at how things get done within the swarm. Digital ants may all behave identically, but ants as we find them in nature behave unpredictably. Rilke notes that “we are the bees of the invisible,” but is a bee simply a bee?
